Hierarchical Reinforcement Learning and Value Optimization for Challenging Quadruped Locomotion

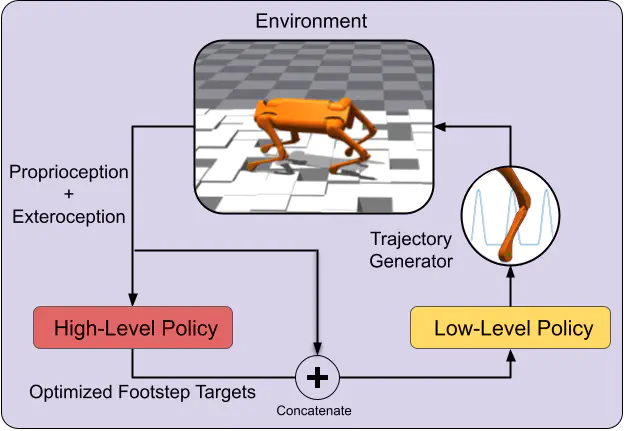

The proposed planning and control architecture

The proposed planning and control architectureAbstract

We propose a novel hierarchical reinforcement learning framework for quadruped locomotion over challenging terrains. Our approach incorporates a two-layer hierarchy where a high-level planner (HLP) selects optimal goals for a low-level policy (LLP). The LLP is trained using an on-policy actor-critic RL algorithm and is given footstep placements as goals. The HLP does not require any additional training or environment samples, since it operates via an online optimization process over the value function of the LLP. We demonstrate the benefits of this framework by comparing it against an end-to-end reinforcement learning (RL) approach, highlighting improvements in its ability to achieve higher rewards with fewer collisions across an array of different terrains.